Open-source LLM benchmark performance - AI tools

LLM Explorer Discover and Compare Open-Source Language Models

LLM Explorer is a comprehensive platform for discovering, comparing, and accessing over 46,000 open-source Large Language Models (LLMs) and Small Language Models (SLMs).

- Free

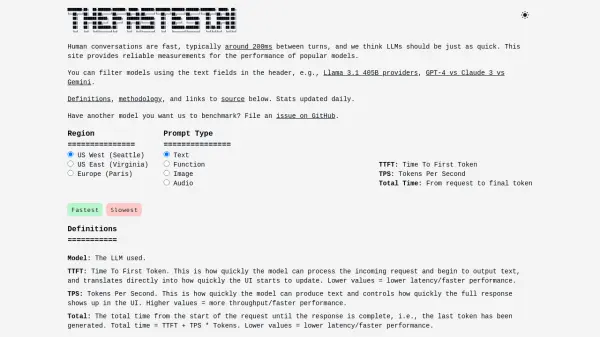

TheFastest.ai Reliable performance measurements for popular LLM models.

TheFastest.ai provides reliable, daily updated performance benchmarks for popular Large Language Models (LLMs), measuring Time To First Token (TTFT) and Tokens Per Second (TPS) across different regions and prompt types.

- Free

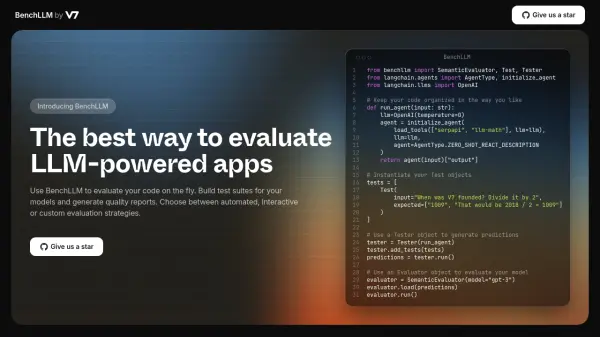

BenchLLM The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

ModelBench No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

Gorilla Large Language Model Connected with Massive APIs

Gorilla is an open-source Large Language Model (LLM) developed by UC Berkeley, designed to interact with a wide range of services by invoking API calls.

- Free

neutrino AI Multi-model AI Infrastructure for Optimal LLM Performance

Neutrino AI provides multi-model AI infrastructure to optimize Large Language Model (LLM) performance for applications. It offers tools for evaluation, intelligent routing, and observability to enhance quality, manage costs, and ensure scalability.

- Usage Based

PromptsLabs A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

OpenRouter A unified interface for LLMs

OpenRouter provides a unified interface for accessing and comparing various Large Language Models (LLMs), offering users the ability to find optimal models and pricing for their specific prompts.

- Usage Based

phoenix.arize.com Open-source LLM tracing and evaluation

Phoenix accelerates AI development with powerful insights, allowing seamless evaluation, experimentation, and optimization of AI applications in real time.

- Freemium
